
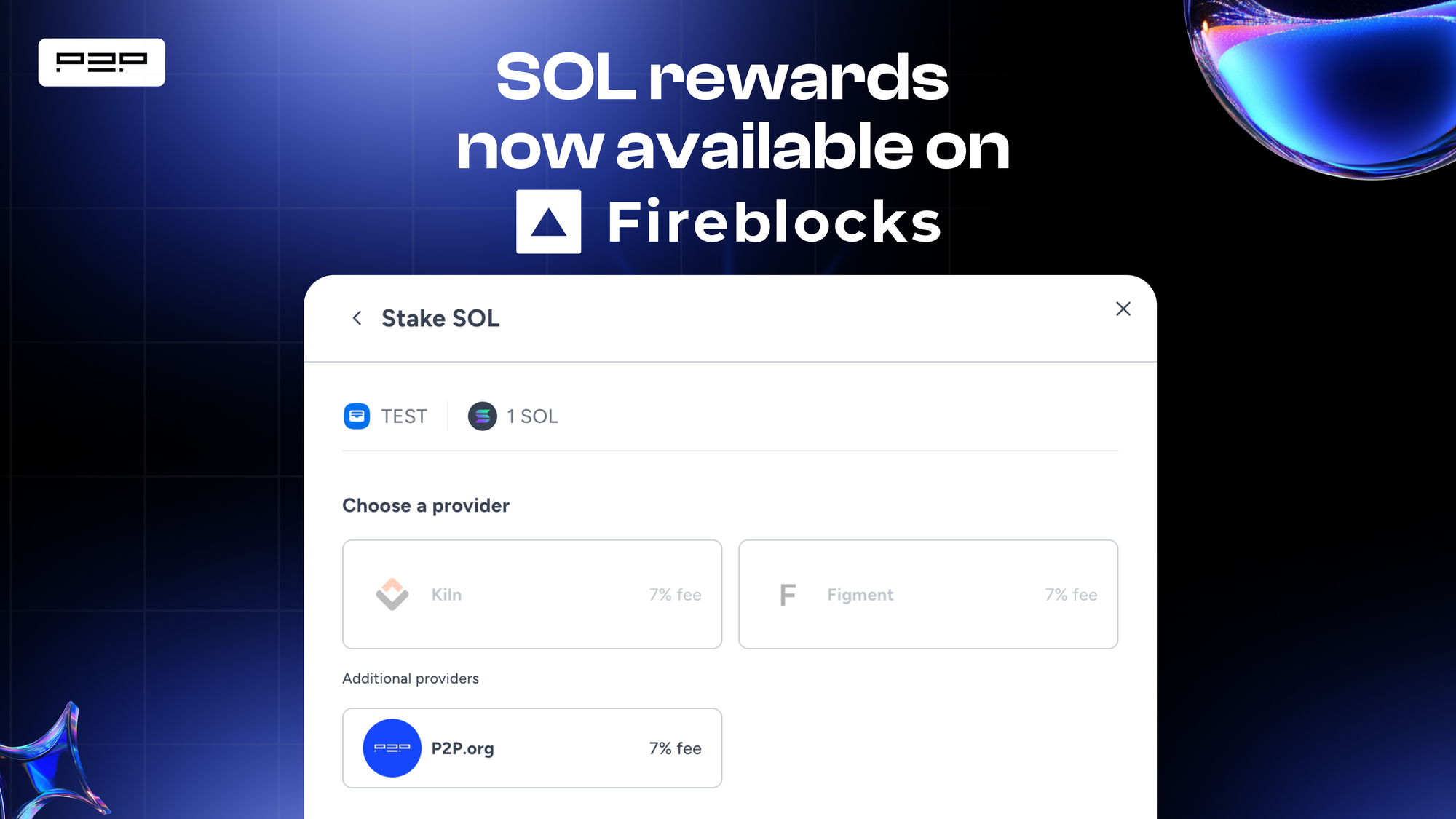

We’re thrilled to announce a major achievement for institutional staking: P2P.org is now officially available as a Solana validator on Fireblocks—the industry's leading digital asset infrastructure platform trusted by over 2,000 institutions worldwide. This integration represents months of work and positions P2P.org at the forefront of institutional Solana staking.

Fireblocks clients now gain access to P2P.org’s market-leading Solana rewards.

By staking with P2P.org through Fireblocks, users get direct access to our reward ecosystem. This multi-layered reward structure maximizes reward potential through three distinct revenue streams:

This comprehensive approach captures value from every aspect of Solana's reward structure, giving Fireblocks clients a substantial advantage over standard validation services, all while maintaining the institutional-grade security and compliance framework that institutions require.

As part of Fireblocks' strategic expansion of staking capabilities, the platform has integrated P2P.org, giving its clients direct access to one of the highest-performing Solana validators. This integration delivers immediate benefits:

Superior Rewards, Zero Complexity: Fireblocks users can now access P2P.org's industry-leading 9.40% total gross rewards, outperforming the network average of 9.16%—without ever leaving their secure Fireblocks environment.

Institutional-Grade Infrastructure: Stake with confidence knowing your assets are backed by P2P.org's enterprise validation technology and Fireblocks' unparalleled security architecture.

Streamlined Experience: Fireblocks' enhanced validator selection interface makes it simple to compare providers and make informed staking decisions in just a few clicks.
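To put the quoted rates in perspective, here is a back-of-the-envelope Python sketch. It assumes both figures are annualized gross rates (the announcement does not say how they are measured or compounded), ignores commission and fees, and uses a hypothetical 100,000 SOL stake; actual rewards will vary.

```python
# Back-of-the-envelope comparison of the quoted gross reward rates.
# Assumptions (not from the announcement): both rates are annualized gross
# rates, commission and fees are ignored, and the stake size is hypothetical.

P2P_RATE_BPS = 940      # 9.40% quoted for P2P.org, in basis points
NETWORK_AVG_BPS = 916   # 9.16% quoted network average, in basis points


def annual_reward(stake_sol: float, rate_bps: int) -> float:
    """Gross reward over one year for a stake at an annual rate given in basis points."""
    return stake_sol * rate_bps / 10_000


if __name__ == "__main__":
    stake = 100_000  # hypothetical stake in SOL
    p2p = annual_reward(stake, P2P_RATE_BPS)
    avg = annual_reward(stake, NETWORK_AVG_BPS)
    relative = (P2P_RATE_BPS - NETWORK_AVG_BPS) / NETWORK_AVG_BPS
    print(f"P2P.org: {p2p:,.0f} SOL, network average: {avg:,.0f} SOL")
    print(f"Difference: {p2p - avg:,.0f} SOL/year ({relative:.1%} more than the average)")
```

Under these assumptions, the 0.24 percentage-point gap works out to about 240 SOL per year on a 100,000 SOL stake, or roughly 2.6% more in gross rewards than the network average.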

As one of the first validators available for Solana on Fireblocks, P2P.org gives institutions immediate access to elite staking infrastructure without the traditional technical barriers. Our solution combines years of validator expertise, enterprise-grade performance, and the credibility of managing $10B+ across 40+ networks—delivering a significant competitive edge to early adopters in the institutional Solana ecosystem.

Our advanced validator technology maximizes blockspace efficiency, delivering a measurable financial impact:

This integration enables institutions to select preferred validators without sacrificing security, compliance, or rewards, allowing for strategic portfolio diversification and reduced exposure to single-provider risk. Adding more staking providers on Solana also strengthens the network's decentralization and resilience.

If you’re looking for special offers, please reach out to your personal Fireblocks account manager.

Comprehensive documentation is available in the Fireblocks Staking Partners documentation.

While Fireblocks plans to expand its validator roster over time, P2P.org is fully integrated and available today. We're committed to building more seamless integration points that make institutional staking accessible, secure, and profitable across all major networks.

Ready to experience institutional-grade Solana staking? Visit p2p.org/solana or get in touch directly with us here.

<p>Zero-knowledge (ZK) proof systems are becoming a cornerstone technology for privacy-preserving and scalable computation in blockchain and cryptographic applications. As proof complexity and throughput demands grow, optimizing hardware utilization becomes essential to maintain performance and cost-efficiency, particularly in GPU-accelerated proving pipelines.</p><p>We at <a href="http://p2p.org/?ref=p2p.org"><u>P2P.org</u></a> have participated in most of the major ZK protocols using different sets of ZK prover hardware. As Ethereum moves toward enshrining ZK proofs in the protocol, and L2Beat-like “slices” overview projects such as https://ethproofs.org/ appear, we want to share with the community one of our research studies, based on the knowledge we have gathered on the subject.</p><p>This study examines GPU utilization strategies for generating ZK proofs, comparing two leading GPU architectures: the <strong>NVIDIA H100</strong> and <strong>L40S</strong>. The main objective is to evaluate whether allocating multiple GPUs to a <em>single</em> proof improves performance more effectively than generating <em>multiple proofs in parallel</em>, each using a single GPU.</p><p>Our benchmark is based on Scroll’s open-source ZK prover implementation, deployed on two high-performance hardware platforms. 
Below are the technical specifications for each setup:</p><h3 id="hardware-specifications"><strong>Hardware Specifications</strong></h3><ul><li><strong>L40S System:</strong><ul><li>CPU: 2× AMD EPYC 9354 (3.80 GHz)</li><li>RAM: 2 TB</li><li>GPU: 8× NVIDIA L40S 48 GB</li><li>Storage: 4× 4 TB NVMe SSD</li><li>Network: 2× 10 Gbit NICs</li></ul></li><li><strong>H100 System:</strong><ul><li>CPU: Intel Xeon 8481C (2.7 GHz, 208 cores)</li><li>RAM: 1.8 TB</li><li>GPU: 8× NVIDIA H100 80 GB</li><li>Storage: 12× 400 GB NVMe SSD</li><li>Network: 1× 100 Gbit NIC</li></ul></li></ul><p>Using a fixed 8-GPU configuration, we tested two modes: (1) increasing the number of GPUs per proof to measure time reduction, and (2) running multiple proofs concurrently to assess total throughput. This section sets the foundation for analyzing the performance trade-offs, CPU/GPU bottlenecks, and real-world cost-effectiveness of ZK proof generation at scale.</p> <!--kg-card-begin: html--> <section id="experiment"> <h2>Benchmarking ZK Prover Performance: Parallelization vs Dedicated GPUs</h2> <p> To evaluate GPU utilization efficiency in zero-knowledge proof generation, we conducted a series of controlled benchmarks on both hardware setups — L40S and H100 — using 8 GPUs in each case. The goal was to compare two strategies: </p> <ul> <li><strong>Strategy A:</strong> Increasing the number of GPUs used for generating a single proof.</li> <li><strong>Strategy B:</strong> Running multiple proofs in parallel, with one GPU assigned per proof.</li> </ul> <p> The Scroll open-source prover was used as the testing framework across both systems. Each configuration was run with fixed parameters and measured for prover time, proof throughput (proofs per day), and system resource utilization (CPU, GPU memory, RAM). 
Below are the summarized results: </p> <h3>L40S Results</h3> <table border="1" cellpadding="8" cellspacing="0"> <thead> <tr> <th>Configuration</th> <th>Prover Time (s)</th> <th>Proofs per Day</th> </tr> </thead> <tbody> <tr> <td>1 GPU on 1 proof</td> <td>792</td> <td>109</td> </tr> <tr> <td>2 GPUs on 1 proof</td> <td>705</td> <td>122</td> </tr> <tr> <td>4 GPUs on 1 proof</td> <td>672</td> <td>128</td> </tr> <tr> <td>8 GPUs on 1 proof</td> <td>688</td> <td>125</td> </tr> <tr> <td>8 GPUs, 8 proofs in parallel</td> <td>1420 (wall-clock for all 8 proofs)</td> <td>486 total (60.8 per GPU)</td> </tr> </tbody> </table> <h3>H100 Results</h3> <table border="1" cellpadding="8" cellspacing="0"> <thead> <tr> <th>Configuration</th> <th>Prover Time (s)</th> <th>Proofs per Day</th> </tr> </thead> <tbody> <tr> <td>1 GPU on 1 proof</td> <td>1047</td> <td>82</td> </tr> <tr> <td>2 GPUs on 1 proof</td> <td>892</td> <td>97</td> </tr> <tr> <td>4 GPUs on 1 proof</td> <td>824</td> <td>105</td> </tr> <tr> <td>8 GPUs on 1 proof</td> <td>803</td> <td>108</td> </tr> <tr> <td>8 GPUs, 8 proofs in parallel</td> <td>2400 (wall-clock for all 8 proofs)</td> <td>288 total (36 per GPU)</td> </tr> </tbody> </table> <p> These results demonstrate that assigning a single GPU to each proof and executing them in parallel yields significantly higher overall throughput than assigning all 8 GPUs to one proof (486 vs. 125 proofs per day on the L40S, and 288 vs. 108 on the H100), especially on the L40S system. Surprisingly, H100 performance gains from parallelization were underwhelming, despite its raw power advantage, suggesting suboptimal software utilization or architectural bottlenecks in the current prover setup. </p> 
<p>In the graph below, the green line shows the efficiency we expected from adding GPUs, the blue line shows the result we measured when adding GPUs to the generation of a single proof, and the red dot marks the generation of 8 ZK proofs simultaneously on the same 8-GPU unit.</p> </section> <!--kg-card-end: html--> <figure class="kg-card kg-image-card"><img src="https://lh7-rt.googleusercontent.com/docsz/AD_4nXcoVS1Xsgm1Lq97e3t799HETaxKKGMdmtPpq7uiVSZ3UJY6GjBlpVk5ywKtq6O-k-O6XFB_4o8cM7Qdp5qozTAVkqlFxl1ixvrS6TWXCZf44CFSzmW_MZgZjsmyijWedj_ds_sP?key=-01FgYzuJbeNBpcm1OIScA" class="kg-image" alt="" loading="lazy" width="1600" height="954"></figure><h2 id="system-resource-utilization-during-proof-generation"><strong>System Resource Utilization During Proof Generation</strong></h2><p>In addition to measuring prover time and throughput, we monitored system-level resource usage to better understand the efficiency and scaling behavior of each GPU configuration. Metrics recorded include peak CPU utilization, maximum GPU memory usage, and RAM consumption across different levels of parallelism.</p><h3 id="l40sresource-metrics"><strong>L40S - Resource Metrics</strong></h3><ul><li><strong>1 GPU on 1 proof:</strong> 792s — CPU: 45%, GPU Memory: 24 GB, RAM: 180 GB</li><li><strong>2 GPUs on 1 proof:</strong> 705s — CPU: 60%, GPU Memory: 24 GB, RAM: 180 GB</li><li><strong>4 GPUs on 1 proof:</strong> 672s — CPU: 60%, GPU Memory: 24 GB, RAM: 180 GB</li><li><strong>8 GPUs on 1 proof:</strong> 688s — CPU: 45%, GPU Memory: 12 GB, RAM: 180 GB</li><li><strong>8 GPUs on 8 proofs (parallel):</strong> 1420s — CPU: 100%, GPU Memory: 24 GB, RAM: 1300 GB</li></ul><h3 id="h100resource-metrics"><strong>H100 - Resource Metrics</strong></h3><ul><li><strong>1 GPU on 1 proof:</strong> 1047s — CPU: 45%, GPU Memory: 46 GB, RAM: 180 GB</li><li><strong>2 GPUs on 1 proof:</strong> 892s — CPU: 60%, GPU Memory: 46 GB, RAM: 180 GB</li><li><strong>4 GPUs on 1 proof:</strong> 824s — CPU: 60%, GPU Memory: 24 GB, RAM: 180 GB</li><li><strong>8 GPUs on 1 proof:</strong> 803s — CPU: 
60%, GPU Memory: 12 GB, RAM: 180 GB</li><li><strong>8 GPUs on 8 proofs (parallel):</strong> 2400s — CPU: 100%, GPU Memory: 46 GB, RAM: 1300 GB</li></ul><p>The results indicate that running proofs in parallel leads to near-full CPU saturation and significantly increased RAM consumption. This suggests that the CPU becomes a limiting factor under heavy GPU parallelism unless it is paired with a properly scaled memory and compute environment.</p><p>Total GPU memory usage scales linearly with the number of concurrent proofs, since each GPU holds one proof at its single-GPU footprint. Aggregate RAM usage reaches 1300 GB with 8 parallel jobs on both systems, about 162.5 GB per proof, which is slightly less than the 180 GB a single proof uses on its own. That total is, however, a larger share of the H100 system's 1.8 TB of RAM than of the L40S system's 2 TB.</p><figure class="kg-card kg-image-card"><img src="https://lh7-rt.googleusercontent.com/docsz/AD_4nXfEU47ZweGZ8HObSGwZGOiSyfHcc8nLrFFwCuUFLXpvJVczCMo3ZW3xa4gbR-hIpRlZ84lN26DjwGlvGRdU24Oz82T0ZoeTsbn3vaJfO6zFLDxMyKEPmxKNa18WEDTow6mv3Z3FVg?key=-01FgYzuJbeNBpcm1OIScA" class="kg-image" alt="" loading="lazy" width="1580" height="980"></figure><p>The RAM usage remains constant at <strong>180 GB</strong> across all single-proof configurations (1, 2, 4, and 8 GPUs). 
This suggests that the memory allocation for the proof generation process does not depend on the number of GPUs involved.</p><p>It is likely that the proving software either <strong>preallocates the required system memory</strong> at the start of the process or that the <strong>computational workload is primarily offloaded to the GPU</strong>, resulting in negligible variation in RAM consumption.</p><p>This behavior indicates that <strong>system RAM is not a limiting factor</strong> in the scaling of proof generation on the H100 hardware, at least when generating a single proof, regardless of GPU count.</p><figure class="kg-card kg-image-card"><img src="https://lh7-rt.googleusercontent.com/docsz/AD_4nXeCAFQTGwDsBk0j9joRf-E6y1-yTtW9JfwQmjqVto88GnYU5W9g1ctGUR5sJq7uX2I_qBsTGnR6T6fUpghOFvMQE-uA1nwh5Pnuet68ef2mtyCQMVswpY-oxQsVsZQU5qe8xJIrEQ?key=-01FgYzuJbeNBpcm1OIScA" class="kg-image" alt="" loading="lazy" width="1576" height="980"></figure><p>When analyzing GPU memory usage on the H100 for single-proof generation, a clear trend emerges: <strong>GPU memory consumption decreases as more GPUs are allocated to the task</strong>.</p><p>With 1 and 2 GPUs, peak memory usage is <strong>46 GB</strong> per GPU; as the workload is distributed across 4 and then 8 GPUs, consumption per GPU drops to 24 GB and finally to <strong>12 GB</strong> in the 8-GPU configuration.</p><p>This behavior is consistent with the expectation that dividing the computation across more GPUs reduces per-device memory pressure, as intermediate states and computational graphs are split and processed concurrently.</p><p>However, despite the lower memory usage, the overall proving time improved only modestly (from 1047 s with 1 GPU to 803 s with 8 GPUs, a reduction of about 23% for eight times the hardware), suggesting that GPU memory was not the bottleneck. 
This reinforces the observation that <strong>parallel GPU allocation alone is not sufficient to accelerate ZK proof generation</strong> without corresponding improvements in software or CPU coordination.</p><h2 id="conclusion"><strong>Conclusion</strong></h2><p>This benchmark study evaluated the performance and hardware efficiency of generating zero-knowledge proofs using two enterprise-grade GPU configurations: the <strong>NVIDIA H100</strong> and <strong>NVIDIA L40S</strong>. The analysis was conducted using Scroll's open-source prover, with a focus on two key strategies: scaling a single proof across multiple GPUs versus running multiple proofs in parallel.</p><p>The results demonstrate that <strong>parallel generation of proofs using individual GPUs</strong> yields significantly better throughput than assigning all GPUs to a single proof process. This effect is especially visible on the L40S platform, where parallel execution nearly quadrupled the number of proofs generated per day compared with assigning all 8 GPUs to one proof (486 vs. 125).</p><p>Surprisingly, the H100, despite its superior hardware specs, underperformed in this scenario. Its single-proof generation times were longer than the L40S's in every configuration, and parallel execution on the H100 also delivered lower throughput (288 vs. 486 proofs per day), suggesting that software bottlenecks or suboptimal utilization patterns may limit its current viability for ZK workloads.</p><p>Additionally, we found that <strong>system RAM and GPU memory were not primary limiting factors</strong> in most configurations. RAM usage remained constant during single-proof runs, while GPU memory usage decreased as GPU count increased. Instead, CPU saturation and parallel processing coordination appear to be more critical for maximizing performance in proof generation.</p><p>In conclusion, <strong>GPU parallelism for a single proof does not scale efficiently</strong> beyond a certain point; on the L40S, proving time even increased from 672 s to 688 s when going from 4 to 8 GPUs. 
ZK infrastructure teams aiming to improve throughput should prioritize software optimization, better CPU/GPU coordination, and parallelization across proofs rather than within a single one.</p>
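The throughput and scaling figures in the benchmark follow directly from the measured prover times. The Python sketch below recomputes them as a cross-check. It assumes a day is 86,400 s of back-to-back proving with no queueing or setup overhead between proofs, and its variable and function names are our own, not part of the Scroll prover. Because the published tables round proofs per day to whole numbers, the computed values differ from them by less than one proof.

```python
# Cross-check of throughput and scaling metrics from the measured prover times.
# Assumption (ours): a day is 86,400 s of back-to-back proving with no queueing
# or setup overhead between proofs. Names here are illustrative, not Scroll's.

SECONDS_PER_DAY = 86_400

# Measured prover time (s) for one proof, keyed by the number of GPUs assigned to it.
SINGLE_PROOF_TIMES = {
    "L40S": {1: 792, 2: 705, 4: 672, 8: 688},
    "H100": {1: 1047, 2: 892, 4: 824, 8: 803},
}

# (concurrent proofs, wall-clock seconds for the whole batch), one GPU per proof.
PARALLEL_RUNS = {"L40S": (8, 1420), "H100": (8, 2400)}


def proofs_per_day(wall_clock_s: float, concurrent_proofs: int = 1) -> float:
    """Proofs completed per day if the same batch is rerun back to back."""
    return concurrent_proofs * SECONDS_PER_DAY / wall_clock_s


def scaling(times: dict[int, float]) -> dict[int, tuple[float, float]]:
    """Speedup T(1)/T(n) and parallel efficiency speedup/n for each GPU count n."""
    base = times[1]
    return {n: (base / t, base / t / n) for n, t in sorted(times.items())}


def report() -> None:
    for system, times in SINGLE_PROOF_TIMES.items():
        print(system)
        for n, (speedup, eff) in scaling(times).items():
            print(f"  {n} GPU(s) on 1 proof: {proofs_per_day(times[n]):6.1f} proofs/day, "
                  f"speedup {speedup:.2f}x, efficiency {eff:.0%}")
        proofs, wall = PARALLEL_RUNS[system]
        batch = proofs_per_day(wall, proofs)
        # Compare against the same 8 GPUs all working on a single proof.
        ratio = batch / proofs_per_day(times[8])
        print(f"  {proofs} proofs in parallel: {batch:6.1f} proofs/day "
              f"({batch / proofs:.1f} per GPU), {ratio:.2f}x the 8-GPU single-proof rate")


if __name__ == "__main__":
    report()
```

Run as a script, it reports a speedup of about 1.15x (roughly 14% parallel efficiency) for 8 GPUs on one L40S proof and about 1.30x (16%) on the H100, while 8 independent proofs deliver about 3.88x and 2.68x the corresponding 8-GPU single-proof throughput.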

from p2p validator

<p>At <a href="http://p2p.org/?ref=p2p.org" rel="noopener noreferrer">P2P.org</a>, we champion blockchain projects driving innovation, and Sahara AI’s blend of AI and blockchain is one of the most exciting we’ve seen. By bringing transparency, ownership, and fairness to the heart of AI development, Sahara AI is laying the foundation for a decentralized AI future.</p><p>As a validator in the SIWA testnet, we’re helping build an ecosystem empowering developers, creators — and most importantly, regular users — in their transition to an AI-powered future.</p><p>In this post, we’ll explore Sahara AI’s bold vision, its product suite, technical breakthroughs, testnet progress, and what’s coming next. We also break down where P2P.org fits in and why we’re fired up to be part of it.</p><h2 id="the-challenge-centralized-ai-roadblocks">The Challenge: Centralized AI Roadblocks</h2><p>Centralized AI systems, controlled by tech giants, limit access to data and computing resources, obscure data origins, and restrict fair rewards for contributors. Developers struggle to verify datasets, while users question AI reliability due to unclear processes. Sahara AI plans to tackle these, enabling transparent, accessible AI development for its community.</p><h2 id="big-bets-on-decentralized-ai">Big Bets on Decentralized AI</h2><figure class="kg-card kg-image-card"><img src="https://p2p.org/economy/content/images/2025/06/SIWA-graphic-1.png" class="kg-image" alt="" loading="lazy" width="1920" height="1080" srcset="https://p2p.org/economy/content/images/size/w600/2025/06/SIWA-graphic-1.png 600w, https://p2p.org/economy/content/images/size/w1000/2025/06/SIWA-graphic-1.png 1000w, https://p2p.org/economy/content/images/size/w1600/2025/06/SIWA-graphic-1.png 1600w, https://p2p.org/economy/content/images/2025/06/SIWA-graphic-1.png 1920w" sizes="(min-width: 720px) 720px"></figure><p>Sahara AI is already making serious waves. 
In August 2024, the company secured a massive $43 million funding round, led by heavyweights like Pantera Capital, YZi Labs (formerly Binance Labs), and Polychain Capital — with strategic backing from Samsung NEXT and Matrix Partners. This bold investment underscores surging confidence in Sahara AI’s decentralized AI vision. With industry veterans Sean Ren and Tyler Zhou (formerly of Binance Labs) at the helm, Sahara Labs is scaling up its global team, supercharging platform performance, and energizing its developer ecosystem. The goal? To democratize access to powerful AI tools and unlock new, secure ways for users around the world to monetize their AI assets.</p><h2 id="sahara-ai%E2%80%99s-solution-a-blockchain-purpose-built-for-ai">Sahara AI’s Solution: A Blockchain Purpose-Built for AI</h2><p><a href="https://saharalabs.ai/?ref=p2p.org" rel="noopener noreferrer">Sahara AI</a> is building the backbone for decentralized intelligence. Leveraging the Cosmos SDK for high-speed, low-cost transactions and full Ethereum Virtual Machine (EVM) compatibility for smart contract flexibility, the Sahara Blockchain was purpose-built with AI development in mind. It supports the full lifecycle of AI development by giving contributors and developers the infrastructure needed to register their AI assets, establish attribution, and enable transparent usage tracking across the ecosystem, no matter what chain they’re on. 
This creates the foundation for new models of monetization, licensing, and collaboration.</p><figure class="kg-card kg-image-card"><img src="https://p2p.org/economy/content/images/2025/06/SIWA-graphic-2.png" class="kg-image" alt="" loading="lazy" width="1292" height="431" srcset="https://p2p.org/economy/content/images/size/w600/2025/06/SIWA-graphic-2.png 600w, https://p2p.org/economy/content/images/size/w1000/2025/06/SIWA-graphic-2.png 1000w, https://p2p.org/economy/content/images/2025/06/SIWA-graphic-2.png 1292w" sizes="(min-width: 720px) 720px"></figure><h3 id="key-technical-features">Key Technical Features</h3><ul><li><strong>On-Chain AI Lifecycle Management:</strong> A suite of AI-native smart contracts designed to bring structure, transparency, and enforceability to every stage of AI development. These protocols enable on-chain asset ownership, provenance tracking, and monetization.</li><li><strong>Off-Chain AI Execution Protocols:</strong> Sahara's off-chain infrastructure powers how AI agents are created, deployed, and run. They provide seamless access to models, databases, and tools, manage automatic agent execution with custom settings, and track usage for transparency and performance. Teams can collaborate on agents and models while retaining control of their assets. To ensure trust, Trusted Execution Environments generate verifiable proofs of each run, which are anchored on-chain.</li><li><strong>Chain Agnostic Infrastructure:</strong> Whether you're just getting started or scaling a production-ready agent, you should be able to tap into Sahara’s infrastructure at any stage of the AI development lifecycle, without having to switch ecosystems or abandon your community.</li></ul><h2 id="how-sahara-ai-products-power-a-decentralized-economy">How Sahara AI Products Power a Decentralized Economy</h2><p>Sahara AI is building a powerful suite of products to democratize AI development, ensuring secure, transparent, and equitable access for everyone. 
In this post, we’ll focus on the Application Layer in particular.</p><figure class="kg-card kg-image-card"><img src="https://p2p.org/economy/content/images/2025/06/SIWA-graphic-3.png" class="kg-image" alt="" loading="lazy" width="2000" height="1125" srcset="https://p2p.org/economy/content/images/size/w600/2025/06/SIWA-graphic-3.png 600w, https://p2p.org/economy/content/images/size/w1000/2025/06/SIWA-graphic-3.png 1000w, https://p2p.org/economy/content/images/size/w1600/2025/06/SIWA-graphic-3.png 1600w, https://p2p.org/economy/content/images/size/w2400/2025/06/SIWA-graphic-3.png 2400w" sizes="(min-width: 720px) 720px"></figure><ul><li><a href="https://app.saharalabs.ai/?ref=p2p.org" rel="noopener noreferrer"><strong>Data Services Platform (DSP)</strong></a> turns user participation into real value. By completing tasks like data labeling, prompt creation, app screen recording, or providing expert input, users earn Sahara Points, fueling both personal rewards and ecosystem growth. The DSP is currently in Early Access (whitelisted), and its Open Beta is set to launch soon after the SIWA Testnet.</li><li><a href="https://app.saharalabs.ai/developer-platform/main/explore?ref=p2p.org" rel="noopener noreferrer"><strong>AI Developer Platform (formerly AI Studio)</strong></a> is a full-stack environment for building, testing, and deploying AI models, agents, and pipelines. Developers can plug into a shared marketplace of assets or upload their own, configure workflows, and publish tokenized components, all while tracking performance and monetization. It’s live now on the SIWA Testnet with the first features related to AI dataset tokenization: <a href="https://app.saharalabs.ai/developer-platform/main/explore?ref=p2p.org" rel="noopener noreferrer">jump in here</a>. More features will unlock soon to deepen participation.</li><li><strong>AI Marketplace (coming soon)</strong> will be the go-to hub for buying, selling, and licensing AI assets, including datasets, models, and agents. 
With flexible licensing, secure ownership, and transparent attribution via the Sahara Blockchain, it will give creators a new way to monetize and buyers a trusted source of modular, high-quality AI components, all with transparent usage tracking.</li></ul><p>Together, these products form the foundation of Sahara AI’s Application Layer. They empower users and developers alike to contribute, innovate, and earn within a secure, equitable, and decentralized AI economy.</p><h2 id="siwa-testnet-p2porg%E2%80%99s-validator-role">SIWA Testnet & P2P.org’s Validator Role</h2><p>Following the success of the private testnet, which saw over 1.4 million daily active accounts and 200,000+ users on the Data Services Platform, Sahara AI has launched SIWA, its first public testnet. SIWA represents a major milestone: bringing AI ownership and provenance fully on-chain, and taking a critical step toward a fairer, decentralized AI economy.</p><figure class="kg-card kg-image-card"><img src="https://p2p.org/economy/content/images/2025/06/SIWA-graphic-4.png" class="kg-image" alt="" loading="lazy" width="1920" height="1080" srcset="https://p2p.org/economy/content/images/size/w600/2025/06/SIWA-graphic-4.png 600w, https://p2p.org/economy/content/images/size/w1000/2025/06/SIWA-graphic-4.png 1000w, https://p2p.org/economy/content/images/size/w1600/2025/06/SIWA-graphic-4.png 1600w, https://p2p.org/economy/content/images/2025/06/SIWA-graphic-4.png 1920w" sizes="(min-width: 720px) 720px"></figure><p>SIWA already lets participants register and tokenize datasets, models, and other AI assets, creating cryptographic proof of ownership and laying the groundwork for future licensing, attribution, and revenue sharing. 
This stage is extremely important for collecting feedback, refining details, and testing all aspects of the system to ensure a tamper-proof mainnet implementation.</p><p><a href="http://p2p.org/?ref=p2p.org" rel="noopener noreferrer"><strong>P2P.org</strong></a><strong> plays a key role as a validator on the SIWA testnet, helping to secure the network and maintain its integrity</strong>. Validators like <a href="http://p2p.org/?ref=p2p.org" rel="noopener noreferrer">P2P.org</a> ensure that transactions are processed accurately, that asset provenance remains tamper-proof, and that the system performs reliably at scale. Community participation is equally essential, as it helps surface potential issues and ensure robust performance. These early efforts are vital to shaping a secure and decentralized foundation for the Sahara AI Blockchain on the mainnet.</p><h2 id="what%E2%80%99s-next-the-road-to-mainnet">What’s Next: The Road to Mainnet</h2><p>SIWA Phase 1 is just the beginning. While it introduces foundational dataset registration and ownership, Sahara AI’s full protocol rollout will unlock deeper capabilities across licensing, monetization, and open-source development — paving the way for a fully decentralized AI stack.</p><figure class="kg-card kg-image-card"><img src="https://p2p.org/economy/content/images/2025/06/SIWA-graphic-5.png" class="kg-image" alt="" loading="lazy" width="1920" height="1080" srcset="https://p2p.org/economy/content/images/size/w600/2025/06/SIWA-graphic-5.png 600w, https://p2p.org/economy/content/images/size/w1000/2025/06/SIWA-graphic-5.png 1000w, https://p2p.org/economy/content/images/size/w1600/2025/06/SIWA-graphic-5.png 1600w, https://p2p.org/economy/content/images/2025/06/SIWA-graphic-5.png 1920w" sizes="(min-width: 720px) 720px"></figure><h3 id="phase-2-%E2%80%93-on-chain-licensing-revenue-distribution-royalty-vaults">Phase 2 – On-Chain Licensing, Revenue Distribution & Royalty Vaults</h3><p>Phase 2 will bring licensing and revenue 
sharing on-chain, allowing developers to set custom licensing terms and automatically receive payments when their assets are used. With royalty vaults, asset owners and investors will claim proportional revenue based on usage, turning attribution into sustainable economic participation for all contributors.</p><h3 id="phase-3-%E2%80%93-permissionless-testnet-with-open-source-protocols">Phase 3 – Permissionless Testnet with Open-Source Protocols</h3><p>This phase will fully decentralize the core infrastructure, eliminating reliance on a centralized multisig and enabling global, permissionless interaction with Sahara Protocols. An open-source ecosystem will allow developers to extend and integrate these protocols freely.</p><h3 id="phase-4-%E2%80%93-pipeline-registration-provenance-tracking-proof-of-contribution">Phase 4 – Pipeline Registration, Provenance Tracking & Proof-of-Contribution</h3><p>The final phase before mainnet will tokenize AI pipelines, providing on-chain registration and attribution for every workflow element. Contributors will earn tokens reflecting their relative value across datasets, models, and prompts, with automated revenue-sharing that recognizes and rewards every part of the AI value chain.</p><h2 id="why-sahara-ai-stands-out">Why Sahara AI Stands Out</h2><p>In a world where AI is growing at breakneck speed, Sahara AI stands out by putting <strong>real ownership and transparency at the center of development</strong>. 
While others focus only on technology, Sahara AI ensures data contributors and developers are recognized, compensated, and empowered within a truly decentralized ecosystem.</p><figure class="kg-card kg-image-card"><img src="https://p2p.org/economy/content/images/2025/06/SIWA-graphic-6.png" class="kg-image" alt="" loading="lazy" width="2000" height="1125" srcset="https://p2p.org/economy/content/images/size/w600/2025/06/SIWA-graphic-6.png 600w, https://p2p.org/economy/content/images/size/w1000/2025/06/SIWA-graphic-6.png 1000w, https://p2p.org/economy/content/images/size/w1600/2025/06/SIWA-graphic-6.png 1600w, https://p2p.org/economy/content/images/size/w2400/2025/06/SIWA-graphic-6.png 2400w" sizes="(min-width: 720px) 720px"></figure><p>Its fast-paced progress, backed by a well-funded team, and the support of leading industry players and AI investors, demonstrates a commitment to solving real challenges like data verification and equitable access. Beyond technical innovation, Sahara AI cultivates a vibrant community that actively shapes and refines its protocols.</p><p>The momentum is building. Developers, businesses, and creators are already tapping into Sahara’s tools to power new AI applications, unlock revenue streams, and build a more inclusive future for machine intelligence. 
With trusted validators like <a href="http://p2p.org/?ref=p2p.org" rel="noopener noreferrer">P2P.org</a> securing the network, Sahara AI isn’t just a blockchain or a platform — it’s a movement redefining how AI is built, owned, and shared in the Web3 era.</p><h2 id="join-the-sahara-ai-ecosystem">Join the Sahara AI Ecosystem</h2><p>The Sahara AI ecosystem is live and evolving, offering opportunities for developers, data contributors, and creators to help shape the future of AI.</p><p>Join the conversation and stay updated through Sahara AI’s <a href="https://saharalabs.ai/blog?ref=p2p.org" rel="noopener noreferrer">blog</a>, <a href="https://discord.gg/CzY5Mpgrpd?ref=p2p.org" rel="noopener noreferrer">Discord</a>, and <a href="https://x.com/SaharaLabsAI?ref=p2p.org" rel="noopener noreferrer">X</a> channels. You can also join the SIWA public testnet to <a href="https://app.saharalabs.ai/developer-platform/main/explore?ref=p2p.org" rel="noopener noreferrer">register your datasets</a>, <a href="https://explorer.moonlet.cloud/sahara-testnet/staking/sahvaloper1hfg3kt2f2p09cv35r3jw44csc2cz8equ63yq38?ref=p2p.org" rel="noopener noreferrer">test how staking works</a>, and <a href="https://saharalabs.ai/?ref=p2p.org" rel="noopener noreferrer">contribute to building a decentralized and equitable AI economy</a>.</p>
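The Phase 2 royalty vaults are described as letting asset owners and investors claim a proportional share of the revenue that usage generates. The post does not describe the vault logic itself, so the Python below is a purely illustrative sketch, not Sahara AI's implementation: it splits an integer revenue amount pro rata across hypothetical holders, using largest-remainder rounding so that payouts in a token's smallest unit always sum exactly to the total. The function name, holder names, share model, and rounding rule are all our assumptions.

```python
# Hypothetical pro-rata royalty split. NOT Sahara AI's vault logic: the
# function name, share model, and largest-remainder rounding are assumptions.


def distribute(revenue: int, shares: dict[str, int]) -> dict[str, int]:
    """Split integer `revenue` (smallest token unit) in proportion to `shares`.

    Each holder first gets the floor of its exact pro-rata amount; the leftover
    units go one each to the holders with the largest fractional remainders
    (ties broken by holder name), so the payouts sum exactly to `revenue`.
    """
    total = sum(shares.values())
    if total <= 0:
        raise ValueError("total shares must be positive")
    payouts: dict[str, int] = {}
    remainders: list[tuple[int, str]] = []
    for holder, share in shares.items():
        amount, rem = divmod(revenue * share, total)
        payouts[holder] = amount
        remainders.append((-rem, holder))  # negate so sorting puts largest first
    leftover = revenue - sum(payouts.values())
    for _, holder in sorted(remainders)[:leftover]:
        payouts[holder] += 1
    return payouts


if __name__ == "__main__":
    # Hypothetical vault: a dataset owner, a model developer, and an investor.
    print(distribute(1_000, {"dataset_owner": 50, "model_dev": 30, "investor": 20}))
```

Integer arithmetic with explicit remainder handling avoids the rounding drift that floating-point shares would introduce, which matters whenever payouts must reconcile exactly with on-chain balances.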
